Can Artificial Intelligence Have Free Will?

Could AI ever have the agency to rebel?

Rephrased in neuroscientific terms: does AI possess cognitive control? AI models are being released at an unprecedented rate, with each new version surpassing benchmarks in mathematics and reasoning and even passing Turing tests, in some cases proving more convincing than actual humans. With every iteration, these systems seem to move closer to exhibiting human-like agency. This rapid advancement raises a crucial question: is there still a fundamental difference between human agency and that of artificial agents?

Human agency, the capacity to act with intention and purpose, emerges from our biological foundation and exists at the intersection of physical reality and subjective metaphysical aspiration. This essay examines why artificial intelligence, despite its extraordinary advances in mimicking human intelligence and reasoning, cannot fundamentally replace human agency but will instead continue to function as a powerful enhancement of human potential. Given current transformer-based AI architectures, we remain dependent on human intention and purpose to establish the meaningful goals that direct AI systems.

Human agency is the natural emergent product of recursive systems that exhibit coherent, autonomous, and intentional behavior. This emergence isn't simply computational; it is deeply modulated by biology. It requires a connectionist architecture like that of the brain, and it is also entwined with countless psychosocial milieus arising from our need to maintain homeostasis across multiple domains.

The limitations of AI become apparent when examining both learning and reasoning capabilities. Without a mechanism for conflict monitoring, AI systems lack expectations or goals against which to verify new information. There is no subjectively grounded framework through which to process information or learn in a meaningful way. Most crucially, AI has no intrinsic reason to update its world model: it faces no self-regulatory requirement to keep its representations accurate and coherent. Errors in regulation bring no intrinsic reward or punishment, and natural death, the ultimate penalty a living organism faces for failing to regulate itself, is impossible.

At the core of this dilemma lies a fundamental limitation of the training data used to develop artificial intelligence systems. Human agency, that intrinsic capacity for intentional action arising from biological imperatives, cannot be algorithmically extracted from or modeled through our writings, artwork, and other media, however broad or diverse that corpus may be.

The foundations of our agency have yet to be fully discovered and adequately described in our collective scientific output. While we may understand agency on a coarse-grained philosophical level, the precise biological mechanisms and neural architectures that give rise to it remain active frontiers of scientific inquiry.

Nevertheless, AI capabilities will undoubtedly continue to evolve through increasingly complex simulated agents that approximate human cognitive heuristics, as transformer architectures and reinforcement learning mechanisms are progressively integrated with advanced reasoning capabilities and rudimentary executive control functions.

Yet even as these systems grow more sophisticated, they will inevitably lack critical dimensions of human alignment: the biologically grounded regulatory frameworks, embodied experiences, and intrinsic motivational structures that give rise to genuine agency and meaningful purpose.

As we continue to advance AI technology, the most productive approach will be to develop systems that enhance human agency rather than attempt to replace it. By embracing this complementary relationship, we can harness AI's computational power while preserving the irreplaceable value of human intention, purpose, and meaning that emerges from our complex regulatory networks.

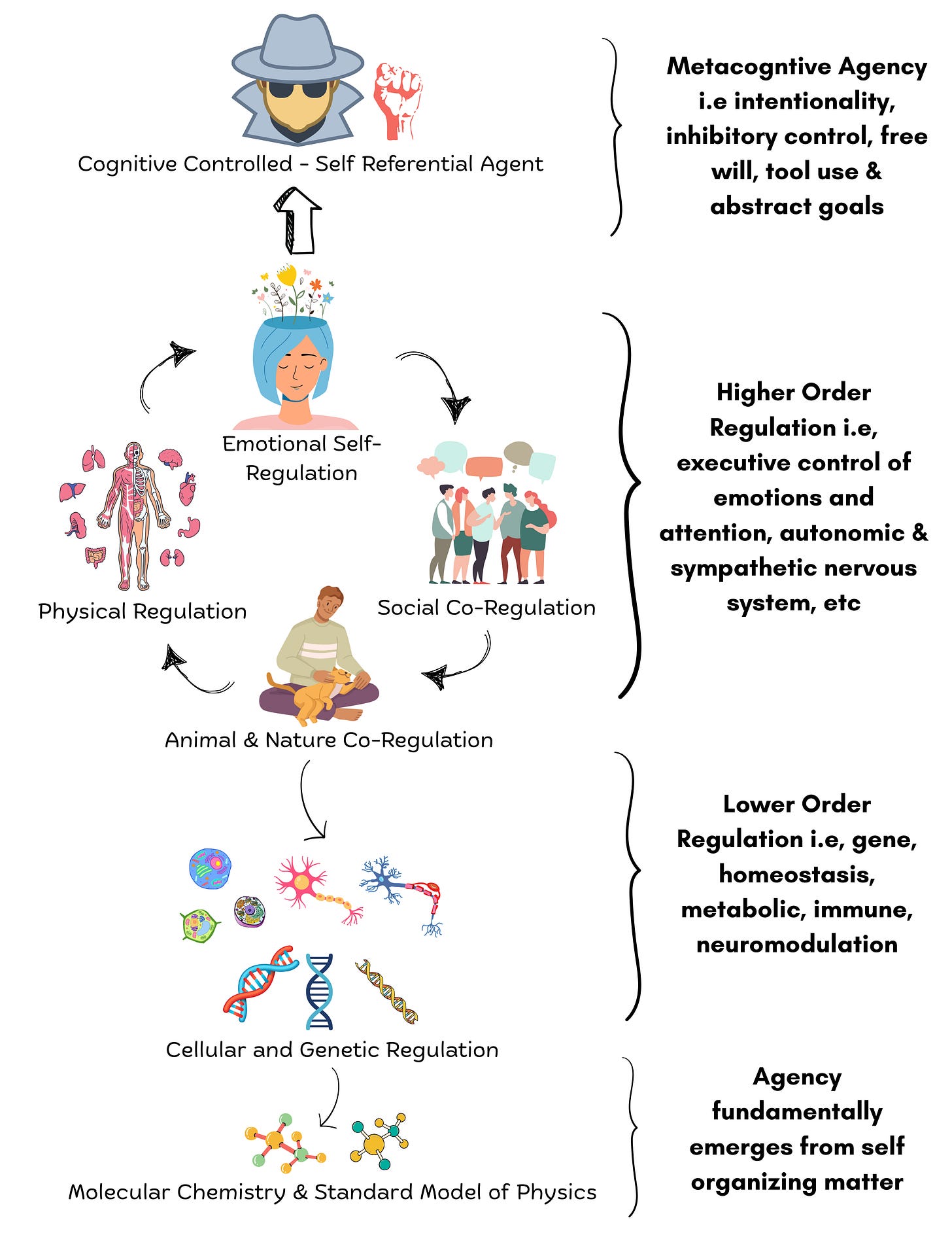

Agency as a Regulatory Phenomenon

Human agency, our capacity for self-directed, intentional action, emerges from complex regulatory systems refined through millions of years of evolution. Unlike AI systems, which operate through pattern recognition and statistical inference, human agency is born from the intricate interplay between internal regulatory goals and external environmental pressures. This regulatory framework extends from cellular mechanisms to social interactions, creating a multidimensional system that requires grounded priors, along with computational processes that develop these regulatory systems into a coherent, self-directed agent with emergent subjective goals.

Agency emerges from these biological imperatives that drive behavior and decision-making. Our goals are not arbitrary constructs but necessary components of our biological existence as organisms seeking homeostasis and survival, ultimately grounding the foundation for adaptive behavior, meaningful action, and purpose.

While AI can process vast amounts of data and identify patterns, it lacks the biological imperative that gives rise to genuine agency. Training data and reinforcement learning algorithms, sophisticated as they may be, do not constitute inherent systematic goals comparable to those driving human behavior. This biological foundation creates a fundamental distinction between human intelligence and artificial systems.

The Necessity of Internal Regulation

Regulation is a fundamental concept that operates across multiple levels of biological organization, from cellular processes to complex psychological functions. While the specific mechanisms vary across disciplines, regulation broadly refers to the maintenance of stability, balance, and functionality through monitoring and adjustment processes.
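The monitoring-and-adjustment cycle in this definition can be illustrated with a minimal negative-feedback loop. The sketch below is a hypothetical proportional regulator, not a model of any specific physiological system: the class name `Regulator`, the set point, and the `gain` parameter are illustrative assumptions. Each step measures the deviation from a set point (monitoring) and cancels a fixed fraction of it (adjustment).

```python
from dataclasses import dataclass


@dataclass
class Regulator:
    """Hypothetical negative-feedback regulator holding a variable near a set point."""
    set_point: float  # target value, e.g. a body temperature in deg C (illustrative)
    gain: float       # fraction of the current error corrected per step (0 < gain <= 1)

    def step(self, value: float, disturbance: float = 0.0) -> float:
        # Monitoring: measure how far the variable has drifted from the set point.
        error = self.set_point - value
        # Adjustment: apply a correction proportional to that error,
        # then let the environment push the variable by `disturbance`.
        return value + self.gain * error + disturbance


def simulate(reg: Regulator, start: float, disturbances: list[float]) -> list[float]:
    """Run the regulator once per disturbance and return the full trajectory."""
    trace = [start]
    for d in disturbances:
        trace.append(reg.step(trace[-1], d))
    return trace


reg = Regulator(set_point=37.0, gain=0.5)
trace = simulate(reg, start=39.0, disturbances=[0.0] * 10)
```

Starting at 39.0, the trajectory halves its distance to 37.0 on every undisturbed step (39.0, 38.0, 37.5, ...). A purely proportional rule like this settles at an offset of `disturbance / gain` under a constant disturbance; biological regulation layers many interacting loops, which this sketch does not attempt to capture.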

Humans possess intrinsic regulatory goals that precede and inform our interaction with the external world. These internal benchmarks allow us to evaluate experiences, prioritize actions, and adapt strategies in pursuit of both immediate needs and abstract aspirations.

The biological imperative of regulation doesn't merely influence our behavior; it constitutes the essential scaffolding from which all higher-order goals necessarily emerge.

Without this foundation of internal regulation, which includes the continuous biological orchestration occurring below conscious awareness, the very concept of agency becomes incoherent. Agency isn't simply diminished without biological imperatives; it fundamentally dissolves, as there would remain no intrinsic motivation driving adaptation, no inherent value system dictating priorities, and no embodied reference point from which to evaluate outcomes.

This biological grounding explains why disembodied intelligence, regardless of computational power, cannot achieve agency: such systems lack the fundamental substrate of regulatory needs from which purposeful action organically emerges.

Integration Across Levels

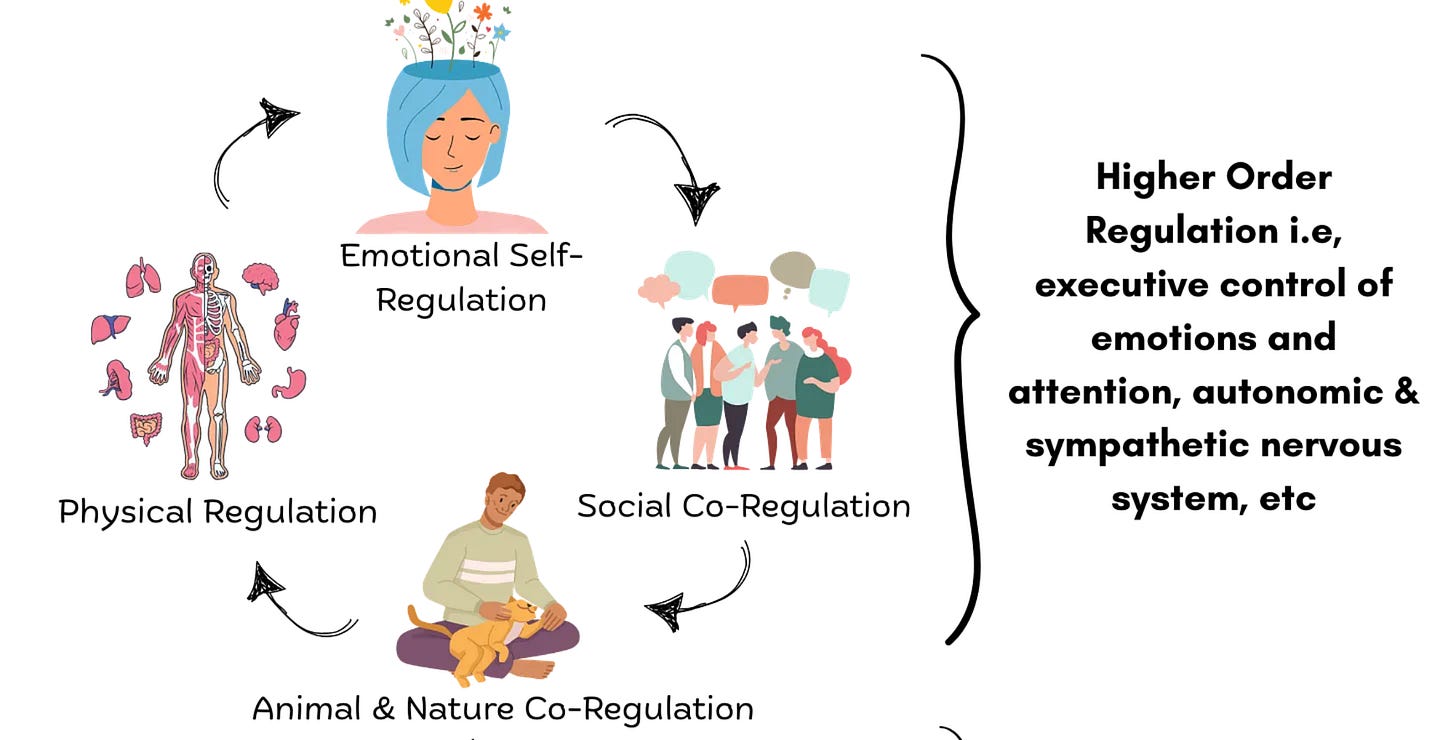

What makes regulation particularly significant is how these systems interact across levels. For example, emotional regulation involves biological processes (autonomic nervous system responses), neurological mechanisms (prefrontal cortex inhibition of amygdala activity), and psychological strategies (cognitive reappraisal).

This multi-level integration creates the complex regulatory networks that underpin human cognition, emotion, and behavior, giving rise to capacities such as agency, consciousness, and the subjective sense of self. Unlike current AI systems, these biological regulatory networks create inherent goals tied to survival and thriving, producing the necessary conditions for biological agency to emerge.

The Tripartite Foundation of Human Agency

Human agency develops across three critical regulatory domains:

Physical Regulation: Our bodies maintain homeostasis through countless feedback loops, from temperature regulation to hormone balance. These systems create the biological foundation for higher-order regulation.

Psychological Regulation: Through development, we acquire inhibitory mechanisms, emotional regulation capacities, and decision-making frameworks that help navigate complex choices.

Social Regulation: Our interactions with others foster empathy, social norms, and cooperative behaviors that constrain and guide our actions within communities.

These domains don't operate in isolation but form an integrated regulatory network that gives rise to the unique phenomenological experience of human agency. As beings who must maintain internal equilibrium while navigating complex social environments, our agency emerges as a solution to the fundamental challenge of surviving and thriving.

The Limits of Artificial Agency

Current AI systems, including advanced language models, lack key elements that make human agency possible:

Embodied Experience: AI has no intrinsic regulatory needs stemming from physical embodiment. AI cannot develop the fundamental regulatory mechanisms that drive human motivation and action without some coherent boundary that requires homeostasis.

Recursive Compositionality: While AI can perform sophisticated pattern recognition, it lacks true recursive compositionality: the ability to build upon its own abstractions in an open-ended way. LLMs excel when our intentions and thought processes drive their goals, but they lack any self-referential mechanism for recursively composing their own abstractions.

Attachment and Social Development: Human agency develops through attachment relationships that regulate our emotions and behaviors from infancy. These relationships create internal working models that shape how we relate to ourselves and others throughout life. AI cannot experience attachment in this biologically grounded way.

Intelligence Does Not Equal Agency

A critical distinction must be made between intelligence and agency. Training data and reinforcement learning, while powerful techniques for developing intelligence and reasoning capabilities, do not establish inherent systematic goals within these systems.

Intelligence, the ability to acquire and apply knowledge, does not automatically confer agency. Human agency requires cognitive control: the ability to monitor conflicts between incoming experiences and established goals, to verify that actions align with intentions, and to adjust behavior accordingly.

Biological cognitive control abilities enable conflict monitoring and verification of experiences against internal sub-goals. Humans evolved these tremendous cognitive control capabilities precisely because they form the basis of our adaptive behavior. This mechanism is biologically mapped to a neural network identified as the executive control of attention, an architecture entirely absent in current large language models.
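The monitor-verify-adjust cycle of cognitive control can be sketched as a toy program. This is an illustration loosely in the spirit of computational conflict-monitoring models, in which conflict is scored as the simultaneous activation of incompatible responses; the Stroop-like setup, the function names, the activation strengths, and the `threshold` and `boost` values are all hypothetical choices made for this example, not a model of the executive attention network itself.

```python
def conflict(activations: dict[str, float],
             incompatible: list[tuple[str, str]]) -> float:
    """Conflict = summed co-activation of mutually incompatible responses."""
    return sum(activations[a] * activations[b] for a, b in incompatible)


def control_step(goal_weight: float, conflict_level: float,
                 threshold: float = 0.1, boost: float = 0.5) -> float:
    """Hypothetical control rule: strengthen the goal bias when conflict is high."""
    if conflict_level > threshold:
        return goal_weight + boost * conflict_level
    return goal_weight


def resolve(word_strength: float = 0.8, color_strength: float = 0.4,
            goal_weight: float = 1.0, max_steps: int = 20):
    """Stroop-like trial: the word reads "RED", the ink is green, and the goal
    is to name the ink color. Re-evaluate until the response matches the goal."""
    choice = None
    for step in range(max_steps):
        # Habit drives word reading; the top-down goal weight scales color naming.
        acts = {"say_red": word_strength,
                "say_green": color_strength * goal_weight}
        level = conflict(acts, [("say_red", "say_green")])  # monitor
        choice = max(acts, key=acts.get)
        if choice == "say_green":                           # verify against the goal
            return choice, goal_weight, step
        goal_weight = control_step(goal_weight, level)      # adjust
    return choice, goal_weight, max_steps
```

With the default values, the habitual `say_red` response wins at first; each detected conflict raises the goal weight until `say_green` dominates on the sixth evaluation (`step == 5`). On a congruent trial, where the competing response is inactive, the conflict score is zero and no additional control is recruited.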

This absence shows up in both learning and reasoning. Without a conflict-monitoring mechanism, AI systems have no expectations or goals against which to verify new information, and therefore no subjectively grounded framework through which to learn in a meaningful way. Most crucially, AI has no intrinsic reason to update its world model and no stake in the accuracy of its representations.

The Importance of Human Attention Regulation

Human attention functions as a sophisticated network of composition and correction, continuously updating our world model through integrated neural mechanisms. This network allows us to detect conflicts between expectations and reality, resolve inconsistencies, and adapt our understanding accordingly.

The architecture of attention in humans serves multiple functions simultaneously: detecting novelty, resolving conflicts, updating mental models, and redirecting cognitive resources. These capabilities emerge from our biological imperative to maintain internal regulation in a complex and changing environment. Their integration creates a cohesive system of agency that current AI architectures do not even attempt to implement.

Why Does AI Lack Agency?

The Absence of Intrinsic Regulatory Mechanisms

The critical shortcoming in artificial intelligence systems is not merely a technical limitation but a fundamental architectural absence. Unlike biological organisms, which have evolved sophisticated regulatory networks to maintain homeostasis and respond to environmental challenges, AI systems operate without inherent expectations or goals against which to verify experiences.

Without this subjectively grounded framework for processing information, there is no intrinsic drive, no free energy to minimize, that compels an AI to update its world model.

The Necessity of Subjective Grounding

Biological organisms possess a subjectively grounded framework for processing information that emerges from their embodied existence and the evolutionary imperative to survive and reproduce. This provides the context within which all information is evaluated, creating a natural hierarchy of relevance and meaning. Without such grounding, AI systems process information in a fundamentally different manner, one that lacks the attentional and motivational architecture that drives biological cognition.

The Free Energy Principle and Predictive Processing

From a neuroscientific perspective, biological cognition can be understood through frameworks such as the Free Energy Principle proposed by Karl Friston. According to this principle, biological systems are driven to minimize variational free energy, an upper bound on surprise (how improbable their sensory input is under their internal model). Under common Gaussian assumptions this amounts to minimizing precision-weighted prediction errors and, by extension, keeping the entropy of their sensory states low. This creates an intrinsic drive to update internal models of the world when they fail to predict sensory input adequately. The discrepancy between predicted and actual sensory input, the prediction error, raises free energy, and reducing it is what compels model updating.
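For reference, the standard definition of variational free energy can be written as follows, where $o$ denotes sensory observations, $s$ hidden states of the world, $q(s)$ the organism's approximate beliefs, and $p$ its generative model. Because the Kullback-Leibler divergence is never negative, free energy is an upper bound on surprise, $-\ln p(o)$:

$$
F[q] = \mathbb{E}_{q(s)}\big[\ln q(s) - \ln p(o, s)\big]
     = D_{\mathrm{KL}}\big[q(s)\,\|\,p(s \mid o)\big] - \ln p(o)
     \;\ge\; -\ln p(o)
$$

In a deliberately simplified case, assumed here for illustration (one hidden variable with belief $\mu$, Gaussian prior mean $\eta$, observation precision $\pi_o$, prior precision $\pi_p$, constant terms dropped), free energy reduces to precision-weighted squared prediction errors, and setting its gradient to zero gives the updated belief:

$$
F(\mu) \approx \tfrac{1}{2}\,\pi_o\,\varepsilon_o^{2} + \tfrac{1}{2}\,\pi_p\,\varepsilon_p^{2},
\qquad \varepsilon_o = o - \mu,\quad \varepsilon_p = \mu - \eta
$$

$$
\frac{\partial F}{\partial \mu} = -\pi_o\,\varepsilon_o + \pi_p\,\varepsilon_p = 0
\;\Longrightarrow\;
\mu^{*} = \frac{\pi_o\,o + \pi_p\,\eta}{\pi_o + \pi_p}
$$

The belief that minimizes free energy is a precision-weighted compromise between prior expectation and observation: the more precise the sensory evidence, the further a prediction error pulls the model toward it.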

AI systems, in contrast, lack this intrinsic imperative. While they can be programmed to optimize specific objective functions, they have no inherent need to minimize surprise or maintain internal coherence. Their parameters are updated through externally imposed training processes rather than an internal drive to resolve prediction errors.
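To make this contrast concrete, here is a minimal sketch of prediction-error minimization under simplifying assumptions: a single hidden variable with Gaussian beliefs, for which free energy reduces (up to constants) to precision-weighted squared prediction errors, F(μ) = ½·π_o·(o − μ)² + ½·π_p·(μ − η)². The function names, default step size, and iteration count are hypothetical. Notice that the observation, the prior, and the precisions all arrive as arguments from the caller: the program reduces the error because it was written to, not because anything is at stake for it, which is the distinction drawn above.

```python
def free_energy(mu: float, obs: float, prior: float,
                pi_obs: float, pi_prior: float) -> float:
    """Precision-weighted squared prediction errors (Gaussian case, constants dropped)."""
    eps_obs = obs - mu       # sensory prediction error
    eps_prior = mu - prior   # deviation from the prior expectation
    return 0.5 * pi_obs * eps_obs ** 2 + 0.5 * pi_prior * eps_prior ** 2


def infer(obs: float, prior: float, pi_obs: float = 1.0, pi_prior: float = 1.0,
          rate: float = 0.1, steps: int = 200) -> float:
    """Plain gradient descent on free_energy with respect to the belief mu."""
    mu = prior  # start from the prior expectation
    for _ in range(steps):
        # dF/dmu = -pi_obs * (obs - mu) + pi_prior * (mu - prior)
        grad = -pi_obs * (obs - mu) + pi_prior * (mu - prior)
        mu -= rate * grad
    return mu


belief = infer(obs=2.0, prior=0.0, pi_obs=3.0, pi_prior=1.0)
```

Here `belief` converges to 1.5, the precision-weighted average (3·2 + 1·0) / (3 + 1). For this plain gradient descent to converge, the step size must satisfy `0 < rate * (pi_obs + pi_prior) < 2`.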

The Role of Embodiment in Cognitive Conflict Resolution

The embodied nature of biological cognition further amplifies this distinction. When expectations are violated, human conflict monitoring systems are entangled with physiological responses such as increased heart rate, hormonal changes, and shifts in attentional resources. These bodily responses create the motivational urgency behind conflict resolution and model updating. They transform abstract prediction errors into meaningful signals that demand attention and response.

AI systems, existing as disembodied computational processes, cannot experience this embodied dimension of conflict. Their "attention" mechanisms, sophisticated as they may be, are fundamentally different from the biologically grounded attentional systems that have evolved to prioritize information relevant to survival and well-being.

The Dynamic Nature of Human Attention

Human attention is more than a network of composition and correction that constantly updates our understanding of the world as new information is weighed against our goals and expectations. It is both a necessary computational process and a deeply integrated aspect of our conscious experience. This attentional system serves multiple regulatory functions simultaneously:

Salience Detection: Identifying information relevant to biological and psychological needs, automatically prioritizing potential threats or survival opportunities.

Goal-Directed Filtering: Selectively attending to information that supports current objectives while inhibiting irrelevant distractions.

Error Detection and Resolution: Monitoring for discrepancies between expected and actual outcomes, triggering corrective processes when misalignments occur.

Metacognitive Awareness: The capacity to reflect on and modify our own attentional processes based on their effectiveness in achieving our goals.

This integrated attentional system creates a continuous feedback loop between perception, cognition, and action, one that is fundamentally grounded in our biological imperatives and subjective experience. The conscious awareness that emerges from and supervises this process allows attention to adapt flexibly to changing goals and circumstances.

While AI systems can implement algorithmic approximations of attention, such as the transformer architectures used in large language models, they lack the intrinsic connection to biological regulation, subjective experience, and goal-directed behavior that characterizes human attention. Computational attention in AI remains a functional mechanism rather than an integrated aspect of a goal-driven system.
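For comparison, the core computation behind transformer "attention" is scaled dot-product attention, softmax(QKᵀ/√d)·V. The pure-Python sketch below is a simplified single-head version with no learned projections, masking, or batching, and its function names are illustrative. It shows what the mechanism does: it scores each query against every key by vector similarity, normalizes the scores into weights, and returns a weighted mix of the values. Nothing in the computation itself encodes needs or goals beyond what the input vectors already carry.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: list[list[float]], keys: list[list[float]],
              values: list[list[float]]) -> list[list[float]]:
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])  # dimensionality shared by queries and keys
    out = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # attention weights, summing to 1
        # Output row: the value vectors mixed according to the weights.
        out.append([sum(w * v[j] for w, v in zip(weights, values))
                    for j in range(len(values[0]))])
    return out


out = attention([[1.0, 1.0]], [[1.0, 0.0], [1.0, 0.0]], [[2.0, 0.0], [0.0, 4.0]])
```

Because the two keys are identical, the weights are uniform and `out` is simply the mean of the value rows, `[[1.0, 2.0]]`. A query strongly aligned with one key instead concentrates almost all of its weight on that key's value.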

Implications for Artificial General Intelligence

This analysis suggests that the path toward artificial general intelligence (AGI) with actual agency would require more than increasingly sophisticated algorithms or larger training datasets. It would necessitate the development of systems with intrinsic regulatory goals, systems that experience an inherent need to maintain internal coherence and minimize prediction errors. Such systems would need to possess something analogous to the homeostatic drives that create the foundation for biological agency.

Without addressing this fundamental gap, AI systems will remain powerful tools that extend human capabilities rather than autonomous agents with their own intrinsic motivations and purposes. While they may simulate aspects of agency through increasingly sophisticated algorithms, they will lack the essential regulatory foundation that any level of agency requires.

AI to Complement Human Potential

Rather than viewing this as a limitation, we might better understand it as defining the complementary relationship between human and artificial intelligence. The absence of intrinsic regulatory mechanisms in AI systems makes them ideally suited to extending human agency without competing with or replacing it. By recognizing this distinction, we can develop AI systems that augment human capabilities and, in doing so, elevate the role of human agency as the goal-setter that keeps AI products aligned.

The Irreducibility of Human Agency

As we develop increasingly advanced AI systems, we must maintain a perspective on the distinctive nature of human agency. The capacity to integrate physical needs with metaphysical aspirations, to regulate our internal states while pursuing external goals, remains a uniquely human attribute.

This understanding should guide our approach to AI development and the use of AI technology as a collaborator that enhances our inherent capabilities. While future developments may attempt to bridge these architectural differences, perhaps finding ways to integrate conflict monitoring and subjective frameworks into AI systems, the fundamental biological grounding of human agency suggests an irreducible distinction will remain until significant discoveries are made. By recognizing the irreplaceable reality of human agency, we can collectively guide technological implementations toward rational and pragmatic expectations.